Geometry & Shader Basics - Geometry in Code

We are going to create a few game classes as we progress, each covering a certain area.

We now have an idea of what geometry is and of some of the structures that are used in MonoGame to help represent it on screen. We are now going to look at the code required to create geometry.

You might be asking yourself why we are creating our own geometry in code rather than just importing a mesh and using that. Well, we could just load a mesh and call its Draw method, but that’s not going to show you what’s going on under the hood. By creating your own vertex data and sending it to the GPU you will have a better understanding of what’s going on.

First we are going to look at creating the transform matrices we need and a camera to view the rendered geometry, along with a base class to create and draw the geometry.

We are also going to use the built in MonoGame effect BasicEffect. We can then look at how the geometry is created and rendered without having to look at custom shaders at this point.

I am going to be referencing the code in the companion git repository, which can be found here. You will need Visual Studio 2022 and MonoGame configured. If you’re unsure of how to do that, please see the MonoGame getting started guide here.

In the project you will see that I have used a few interfaces. I am not going to go into detail about them as I want to focus as much as I can on the classes and code required for creating and rendering the geometry.

The Transform

Moving, scaling (making an object bigger or smaller) and rotating an object in the game world is called a Transform. We are altering the object’s fundamental properties: its position, its scale and/or its rotation in the world. In order to do this we use a structure called a Matrix.

A Matrix is an NxN (in this case 4x4) grid of values (floating point numbers) that holds the object’s position, its scale and its rotation. We can transform objects from one space into another by multiplying these matrices together (for example, a sword held in a hand would need its matrix multiplied by the hand’s world matrix to stay in position in the hand), but for the purpose of this book we are going to stick to a single matrix per set of geometry. I will touch on some Matrix math later in the Maths section.
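To make the sword and hand example a little more concrete, here is a minimal sketch of combining a child matrix with its parent’s world matrix. The class and method names are hypothetical and are not taken from the companion repository; only the MonoGame Matrix calls are real.

```csharp
using Microsoft.Xna.Framework;

// Hypothetical helper, not part of the companion repository.
public static class ParentChildExample
{
    // Combines the sword's matrix (relative to the hand) with the hand's world matrix.
    public static Matrix SwordWorld(Matrix swordRelativeToHand, Matrix handWorld)
    {
        // MonoGame treats vectors as rows, so the child's matrix goes on the left.
        return swordRelativeToHand * handWorld;
    }

    public static Vector3 Demo()
    {
        Matrix handWorld = Matrix.CreateTranslation(10f, 0f, 0f);          // hand is 10 units along X
        Matrix swordRelativeToHand = Matrix.CreateTranslation(0f, 1f, 0f); // sword sits 1 unit above the hand
        return SwordWorld(swordRelativeToHand, handWorld).Translation;     // (10, 1, 0)
    }
}
```

If the hand moves, only handWorld changes and the sword follows it, which is why this kind of multiplication is handy even though we will not need it for the geometry in this chapter.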

As we know, our points in space, our vertices, also have position and direction. The transform will help us move, rotate and scale them all at the same time; the transform is the root of all the vertex data.

We are going to create a class called Transform to store all this information for us and put that information into a Matrix that can then be used to position, scale and rotate our geometry in the game world.

Scale

This represents the size of our geometry in the world and we store it in a Vector3, so three floating point values, one for each axis: X, Y and Z.

Position

This represents the position of the geometry in the world, again using a Vector3, and again a value per axis.

Rotation

This represents the geometry’s rotation in the world. A Vector3 could be used for this, but I like to use Quaternions. Quaternions are quite a deep topic in their own right and I fear I am not able to give them the in depth attention they need. I’ll cover this a bit more in the Maths section.

The World Matrix

This is the result of the three elements brought together into the transform matrix. This is done by creating a scale Matrix, multiplying that by a rotation matrix and then multiplying that by a translation matrix.

This is the first of the transform matrices we discussed earlier. This matrix will be used to move our vertex data from object space into world space, which is simply done by multiplying the vertex position by this world matrix.

In the repo, look in the Models folder and you will find the Transform class. It has the properties:-

- Vector3 Scale

- Vector3 Position

- Quaternion Rotation

- Matrix World.

The first three properties are used to create the World Matrix. This matrix is used to move our vertex positions from object space to world space.

The World matrix property calculates itself on the fly by multiplying together three matrices created from our three key properties, Scale, Rotation and Position. The multiplication order here is important: scale first, then rotation, then translation.
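As a rough illustration of the description above, a transform along these lines could look like the following. This is a minimal sketch and not the repository’s actual Transform class; the name TransformSketch and the default values are my assumptions.

```csharp
using Microsoft.Xna.Framework;

// Hypothetical stand-in for the repository's Transform class; the real one may differ.
public class TransformSketch
{
    public Vector3 Scale { get; set; } = Vector3.One;
    public Vector3 Position { get; set; } = Vector3.Zero;
    public Quaternion Rotation { get; set; } = Quaternion.Identity;

    // Rebuilt on every read: scale first, then rotate, then translate.
    public Matrix World =>
        Matrix.CreateScale(Scale) *
        Matrix.CreateFromQuaternion(Rotation) *
        Matrix.CreateTranslation(Position);
}
```

With Scale set to (2, 2, 2) and Position set to (10, 0, 0), calling Vector3.Transform(new Vector3(0, .5f, 0), transform.World) moves the object space point to (10, 1, 0). If the translation were applied before the scale, the same point would end up at (20, 1, 0) because the offset itself would be scaled, which is why the order matters.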

Note, I have created an interface for our Transform class called ITransform, this can be found in the Interfaces folder.

The Camera

In order for us to render our geometry we will need a camera to be able to see it in the game world. The camera will be our perspective on the world: where we are and what direction we are looking in. Our camera will also need a Transform for this information, as well as a field of view and near and far clipping planes (the nearest and farthest distances the camera can see). It will also need a Projection and a View matrix that will help transform the geometry into screen space, in other words, render it in the camera.

We will cover how this is done when we come to write our own shaders. For now, we will use them along with BasicEffect so we can start creating some geometry and rendering it on the screen.

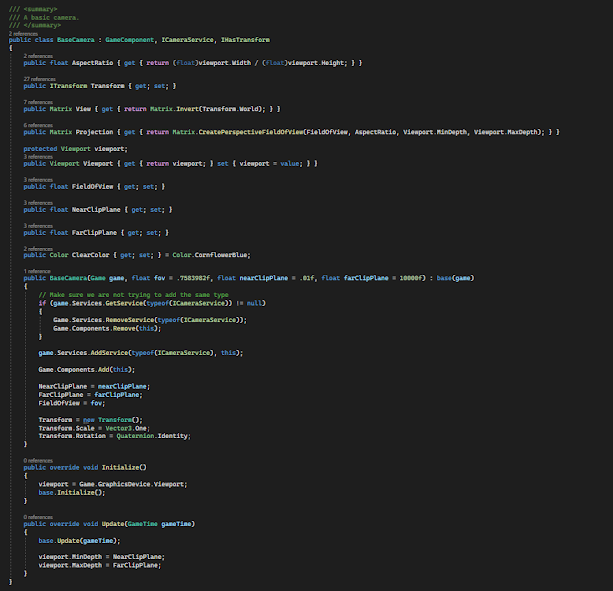

You will find the BaseCamera class in the Models/Cameras folder. It has these pertinent properties:-

- ITransform Transform

- Matrix View

- Matrix Projection

As you can see, the View matrix is the camera’s World matrix inverted (obtained from the camera’s Transform property). This is done because we want to move World space data into the space around the viewer, into View Space, not into the viewer’s actual space.

The Projection matrix is created using a built in function given by the MonoGame Matrix structure, CreatePerspectiveFieldOfView. We pass this a field of view (effectively Pi/4 if you look at the constructor default), the AspectRatio, which is calculated from the viewport width and height (the screen size, in this case), and the near and far clip planes. This matrix will then move objects in View Space to Screen Space. We will see this when we start looking at creating our own shaders.
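The two camera matrices described above can be sketched as follows. This is only an approximation of what BaseCamera does; the helper names and the near and far plane defaults are assumptions of mine, while Matrix.Invert, Matrix.CreatePerspectiveFieldOfView and MathHelper.PiOver4 are the real MonoGame members.

```csharp
using Microsoft.Xna.Framework;

// Hypothetical helper; the repository's BaseCamera may be organised differently.
public static class CameraMatrices
{
    // The View matrix is the inverse of the camera's own World matrix.
    public static Matrix CreateView(Matrix cameraWorld)
    {
        return Matrix.Invert(cameraWorld);
    }

    // The clip plane defaults here are placeholder values, not the repository's.
    public static Matrix CreateProjection(
        float viewportWidth,
        float viewportHeight,
        float fieldOfView = MathHelper.PiOver4,
        float nearPlane = 0.1f,
        float farPlane = 1000f)
    {
        float aspectRatio = viewportWidth / viewportHeight;
        return Matrix.CreatePerspectiveFieldOfView(fieldOfView, aspectRatio, nearPlane, farPlane);
    }
}
```

In a game class the viewport size would typically come from GraphicsDevice.Viewport.Width and GraphicsDevice.Viewport.Height.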

Other Base Classes

BaseGame

Here we will put all our camera movement and quit functionality, we can then create a game class per chapter, but they all get this code without us duplicating it. Again, in the Models folder you should find BaseGame.cs.

In here we have access to our camera and, using the keyboard, we can move its position and rotate it.

GeometryBase<T> Class

With this abstract base class for our geometry, we can then derive from it and create any geometry we want to and apply any effect (shader) we want, using any vertex structure we wish to use.

For now, we are only going to concern ourselves with the following properties:-

- List<Vector3> Vertices

- List<Color> Colors

- List<int> Indicies

The GeometryBase class needs to be initialized with a type that implements the IVertexType interface and it derives from DrawableGameComponent. The first vertex type we are going to use is the VertexPositionColorTexture, so our first incarnation of this class will focus on this vertex type.

We have a number of lists to store the various vertex elements we need to render our geometry: a list of Vector3s for the position data (which I named Vertices), a list of Vector2s to store the texture coordinate data and a list of Colors for the colour data.

We have not discussed indices yet, and they are quite an important part of rendering any geometry. The index is the order in which the vertices are drawn. This is super important because if they are drawn in the wrong order you will get some very odd results. We will have a look at that when we create our first geometry…

We also have an ICameraService property that will return the camera service, an ITransform, a List of the generic type specified in the class definition, a Texture2D that if left unpopulated is initialized as a single white pixel, an Effect instance and an array of shorts to be used for the index.

Our First Geometry

We will start by setting up some more geometry classes, this will save us having to create the same data for the shapes we are going to use going forward. We are going to create a triangle, a quad (flat square) and a cube.

Again, we will add to these later, but for now, we will set them up to use our first vertex type.

GeometryTriangleBase<T>

As you can see, we are deriving from GeometryBase<T> and all we are doing is adding the data required for a triangle.

The first vertex is the top of our triangle, positioned in object space .5f above the centre of the object. If the colour isn’t set it’s given the colour white, and it has a texture coordinate that positions it at the top centre of any texture that is used on it. A UV of [.5,.5] is the centre of a texture; we will cover this in more detail later.

The second vertex is the bottom right point of our triangle, positioned below and to the right of the object centre by .5f on each axis, with a texture coordinate of the bottom right corner of any texture used on it.

The last vertex is the bottom left point of our triangle, positioned below and to the left of the object centre by .5f on each axis, with the bottom left texture coordinate for any texture used on it.

We then set the drawing index so that the vertices are drawn in the order 0, 1 then 2.
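Putting the three vertices described above into code gives something like the following. This is a sketch of the data only, held in a hypothetical static class rather than the real GeometryTriangleBase<T>, so the layout in the repository may differ.

```csharp
using System.Collections.Generic;
using Microsoft.Xna.Framework;

// Hypothetical container for the triangle data described in the text.
public static class TriangleData
{
    // Object space positions: top, bottom right, bottom left.
    public static readonly List<Vector3> Vertices = new List<Vector3>
    {
        new Vector3(0f, .5f, 0f),
        new Vector3(.5f, -.5f, 0f),
        new Vector3(-.5f, -.5f, 0f)
    };

    // Texture coordinates: top centre, bottom right, bottom left.
    public static readonly List<Vector2> TexCoords = new List<Vector2>
    {
        new Vector2(.5f, 0f),
        new Vector2(1f, 1f),
        new Vector2(0f, 1f)
    };

    // Colours default to white when nothing else is set.
    public static readonly List<Color> Colors = new List<Color>
    {
        Color.White, Color.White, Color.White
    };

    // Draw order: top, bottom right, bottom left (clockwise when seen from the front).
    public static readonly List<int> Indices = new List<int> { 0, 1, 2 };
}
```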

Let’s now create a class that will implement this triangle using the VertexPositionColorTexture vertex type.

TriangleBasicEffect Class

This class, as I am sure you can guess from its name is going to create a Triangle for us, and it is going to use the built in shader that comes with the framework, BasicEffect.

This file can be found in Models/BasicEffect

As you can see in our LoadContent method, we load our Effect with an instance of BasicEffect, then set the colours of our vertices, the first red, the second blue and the third green, then call base.LoadContent, which will populate the vertexArray with the three vertices we need to draw our triangle.

In our SetEffect method, we populate the BasicEffect properties with the relevant transform matrices that it can then use to move our vertex data into world space, then view space and finally into screen space to allow our camera to see the triangle rendered on screen. Notice that we have set VertexColorEnabled to true; if we don’t, the colours we set in the vertex are ignored by BasicEffect. The same goes for TextureEnabled, without which textures will be ignored by BasicEffect.
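The settings described above map directly onto BasicEffect properties. The sketch below shows one way a SetEffect method could be written; the static helper and its parameter list are my assumptions, while the BasicEffect properties are the real MonoGame ones.

```csharp
using Microsoft.Xna.Framework;
using Microsoft.Xna.Framework.Graphics;

// Hypothetical helper; in the repository this logic lives in the geometry class itself.
public static class BasicEffectSetup
{
    public static void SetEffect(
        BasicEffect effect, Matrix world, Matrix view, Matrix projection, Texture2D texture)
    {
        // The three transform matrices: object -> world -> view -> screen.
        effect.World = world;
        effect.View = view;
        effect.Projection = projection;

        // Without this the per vertex colours are ignored.
        effect.VertexColorEnabled = true;

        // Without this the texture is ignored.
        effect.TextureEnabled = true;
        effect.Texture = texture;
    }
}
```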

In our draw call, all we now need to do is call the SetEffect method before we then send the vertex data to the GPU and have it rendered.

We are using the GraphicsDevice method DrawUserIndexedPrimitives. This allows us to send a list of triangles (primitives), in this case a list of one triangle stored in our vertex array. We can offset the starting vertex, but we don’t want to in this call so we have used the value 0, the start of the list. We then tell it how many vertices are in our list, pass the index array and the index offset (again 0 here), and finally give the number of primitives (triangles) to draw.
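The call described above could look roughly like this. It is a minimal sketch under the assumption that the effect has already been set up and that the vertex and index arrays are populated; the DrawHelper class is hypothetical.

```csharp
using Microsoft.Xna.Framework.Graphics;

// Hypothetical helper showing the draw call on its own.
public static class DrawHelper
{
    public static void Draw(
        GraphicsDevice device,
        Effect effect,
        VertexPositionColorTexture[] vertexArray,
        short[] indices)
    {
        foreach (EffectPass pass in effect.CurrentTechnique.Passes)
        {
            // Bind the shader and its parameters before sending any geometry.
            pass.Apply();

            device.DrawUserIndexedPrimitives(
                PrimitiveType.TriangleList,
                vertexArray,
                0,                    // vertex offset: start of the array
                vertexArray.Length,   // number of vertices
                indices,
                0,                    // index offset: start of the array
                indices.Length / 3);  // three indices per triangle
        }
    }
}
```

For our triangle that works out as three vertices, three indices and a primitive count of one.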

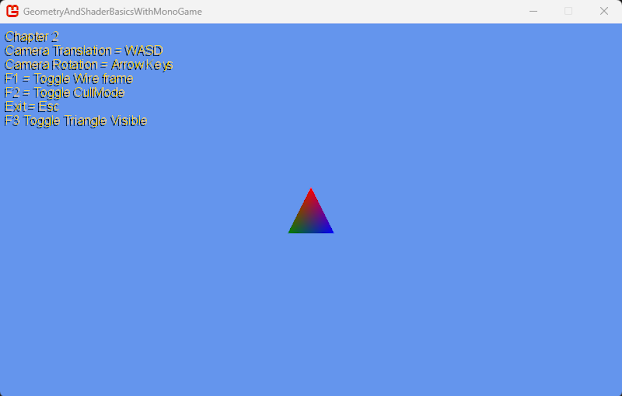

Render Triangle Basic Effect

All we need to do now is add our TriangleBasicEffect to a new Game class and run the code. I have created a Game_Chapter_2 class. It simply derives from our abstract BaseGame class, adds our new TriangleBasicEffect and some simple functionality for hiding the render.

Now, all we need to do is alter Program.cs to run this game class and we can run it.

Awesome! We can see each of the vertices is coloured as we specified, but you will also notice that the colours gradually fade from one vertex to the next. This is due to linear interpolation, which we will cover later. The only colour data the GPU has is at the vertices themselves, so as it renders the pixels inside our triangle it has to interpolate the colour from one vertex to the next.
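To give a feel for that interpolation, here is a small sketch that blends three vertex colours using weights that add up to 1 (one weight per vertex, larger the closer the pixel is to that vertex). The GPU does this for us in hardware, and also corrects for perspective, so this is purely an illustration; BlendVertexColours is a hypothetical helper.

```csharp
using Microsoft.Xna.Framework;

// Hypothetical illustration of what happens to colours between vertices.
public static class ColourInterpolation
{
    // w0 + w1 + w2 is expected to equal 1.
    public static Color BlendVertexColours(
        Color c0, Color c1, Color c2, float w0, float w1, float w2)
    {
        Vector3 blended =
            c0.ToVector3() * w0 +
            c1.ToVector3() * w1 +
            c2.ToVector3() * w2;

        return new Color(blended);
    }
}
```

A pixel sitting exactly on the first vertex has weights (1, 0, 0) and so gets that vertex’s colour unchanged, while a pixel in the very middle of the triangle has weights of a third each and gets an even mix of all three.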

We can move the camera around our triangle, view it in wireframe mode as well as switch off backface culling, both things we will come to later, but we have our first geometry up on the screen at last. The next few will be much easier to do now we have some base line code in place.

CullMode

What happens if we go behind our triangle and have a look at it there?

For those of you that didn’t have a look, the triangle disappears!!

So, the reason why the triangle vanishes when we look at the back of it is because of the CullMode. The cull mode is a smart way for the GPU to throw away triangles that are facing away from the camera so it does not have to render them. Imagine if we were rendering a cube. Why would we want the GPU wasting time on the triangles on the other side of the cube that are facing away from us and that we will never see? It would be a waste of GPU cycles to render them.

For this example, we could tell the GPU to not cull any triangles, we can then go behind our triangle and still be able to see it.

In our BaseGame, hitting F2 toggles the CullMode, switching it off and back on. While looking at the back of the vanished triangle, hit F2 and the triangle will now be rendered. In this mode the GPU renders every triangle, regardless of whether it is facing the camera or not. This is not the most efficient thing to do, but can be useful in some cases.
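A toggle like the one described above could be sketched as follows. I am assuming here that the toggle switches between CullMode.None and MonoGame’s default of CullCounterClockwiseFace; the actual BaseGame code may differ, and ToggleCullMode is a hypothetical name.

```csharp
using Microsoft.Xna.Framework.Graphics;

// Hypothetical helper; BaseGame in the repository may implement this differently.
public static class CullModeToggle
{
    public static void ToggleCullMode(GraphicsDevice device)
    {
        RasterizerState current = device.RasterizerState;

        // A RasterizerState cannot be modified once it has been bound to the
        // device, so we build a new one that copies the fill mode across.
        device.RasterizerState = new RasterizerState
        {
            FillMode = current.FillMode,
            CullMode = current.CullMode == CullMode.None
                ? CullMode.CullCounterClockwiseFace
                : CullMode.None
        };
    }
}
```

In a real game it would be tidier to create the two states once and swap between them rather than allocating a new one on every key press.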

What has this got to do with the index?

If the CullMode were CullClockwiseFace, then rather than us having to move around to the back of the triangle to see it, we could just reverse the index array so that it drew the vertices in the order 2, 1, 0, or draw them 0, 2, 1. This is called the winding order; the order in which the vertices are drawn tells the GPU which triangles are to be culled.

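The winding order clue is in the culling mode name. By default MonoGame uses CullCounterClockwiseFace, which tells the GPU to cull any triangle whose vertices appear in counter clockwise order from the camera’s point of view. Our triangle is drawn clockwise when seen from the front, so it is rendered, but seen from the “back” the same vertices appear counter clockwise and it is culled. CullClockwiseFace does the opposite and tells the GPU to cull any triangle whose vertices appear in clockwise order.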

If we set the index for our triangle to be 0,1,2,0,2,1 it would draw both sides of the triangle regardless of culling mode.
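If you want to check which way a set of indices winds, a small helper like this one can work it out from the positions as seen from the front (X to the right, Y up). It is a hypothetical utility for experimenting with, not something from the repository or from MonoGame itself.

```csharp
using Microsoft.Xna.Framework;

// Hypothetical utility for checking the winding order of a triangle.
public static class WindingOrder
{
    // Assumes X points right and Y points up from the viewer's point of view.
    public static bool IsClockwise(Vector2 a, Vector2 b, Vector2 c)
    {
        // Twice the signed area of the triangle: negative means clockwise.
        float cross = (b.X - a.X) * (c.Y - a.Y) - (c.X - a.X) * (b.Y - a.Y);
        return cross < 0f;
    }
}
```

Using our triangle’s positions, top (0, .5), bottom right (.5, -.5) and bottom left (-.5, -.5), the order 0, 1, 2 comes out as clockwise, while 0, 2, 1 comes out as counter clockwise.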

Let’s now put all our code back as it was, the index as 0, 1, 2 and create some more geometry to render.

GeometryQuadBase<T> Class

Let’s make something a little more complicated. We would rarely use a single triangle to represent something on the screen. A popular shape is the Quad, quite often used for billboards and particle effects; the quad will probably be the most used shape in most games.

We are going to use our GeometryBase class again and create a new class called QuadBasicEffect. Again, we are for now just using the built in BasicEffect, but we still need this base class for the shape.

You can see we now have four vertices, one for each corner of our quad, and six indices, three for each of the two triangles that make up the quad.
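As with the triangle, the quad data can be sketched in a few lines. The vertex order used here (top left, top right, bottom right, bottom left) is my assumption, chosen so that the first triangle is the top right one and both triangles wind clockwise; the repository’s GeometryQuadBase<T> may order things differently.

```csharp
using System.Collections.Generic;
using Microsoft.Xna.Framework;

// Hypothetical container for the quad data described in the text.
public static class QuadData
{
    // Object space positions: top left, top right, bottom right, bottom left.
    public static readonly List<Vector3> Vertices = new List<Vector3>
    {
        new Vector3(-.5f, .5f, 0f),
        new Vector3(.5f, .5f, 0f),
        new Vector3(.5f, -.5f, 0f),
        new Vector3(-.5f, -.5f, 0f)
    };

    // Texture coordinates matching each corner of the texture.
    public static readonly List<Vector2> TexCoords = new List<Vector2>
    {
        new Vector2(0f, 0f),
        new Vector2(1f, 0f),
        new Vector2(1f, 1f),
        new Vector2(0f, 1f)
    };

    // Two clockwise triangles sharing the diagonal between vertices 0 and 2.
    public static readonly List<int> Indices = new List<int>
    {
        0, 1, 2, // top right triangle
        0, 2, 3  // bottom left triangle
    };
}
```

Notice that the two triangles share two of the four vertices, which is exactly what the index list buys us: four vertices instead of six.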

We will now create a class to implement our Quad using BasicEffect.

QuadBasicEffect Class

All we have had to do is alter the content of the vertex array and that’s it.

Again we have coloured each vertex individually: top left is red, top right is blue, bottom right is green and bottom left is yellow.

Render QuadBasicEffect

All we need to do is replace our TriangleBasicEffect instances with QuadBasicEffect instances and we can see our quad rendered on the screen.

Again we can see our vertex colours interpolated across the entire mesh created by the two triangles we set out in our code. We can actually see the triangles rendered by making a change to the graphics device again.

Our first triangle drawn is the top right hand one, the second is the bottom left hand triangle.

The BasicEffect shader is rendering the texture and multiplying the colour by the vertex colours we have set. The black sections are the areas of the texture that have no colour or alpha set, so are effectively black. We are not doing any alpha blending at the moment or those areas would show up see through, again something we will look at when writing our own shaders.

GeometryCubeBase<T> Class

We have been playing around creating two dimensional geometry; we are now going to turn our hand to three dimensions, a cube. All we need to do is generate the six faces of the cube, so that will effectively be six quads, each having four vertices and so two triangles each.
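One way of generating those six quads is to loop over the six face directions and build a quad for each. This is a sketch of the idea only and is not taken from GeometryCubeBase<T>, which may well list its vertices by hand; the CubeData class and the way the face axes are chosen are my assumptions.

```csharp
using System.Collections.Generic;
using Microsoft.Xna.Framework;

// Hypothetical cube builder: six quads, 24 vertices and 36 indices in total.
public static class CubeData
{
    public static void Build(List<Vector3> vertices, List<int> indices)
    {
        Vector3[] normals =
        {
            Vector3.UnitZ, -Vector3.UnitZ,
            Vector3.UnitX, -Vector3.UnitX,
            Vector3.UnitY, -Vector3.UnitY
        };

        foreach (Vector3 normal in normals)
        {
            // Pick an "up" direction lying in the face, then derive "right"
            // as it would appear when looking at the face from outside the cube.
            Vector3 up = normal.Y != 0f ? Vector3.UnitZ : Vector3.UnitY;
            Vector3 right = Vector3.Cross(up, normal);
            Vector3 centre = normal * .5f;
            int first = vertices.Count;

            vertices.Add(centre - right * .5f + up * .5f); // top left
            vertices.Add(centre + right * .5f + up * .5f); // top right
            vertices.Add(centre + right * .5f - up * .5f); // bottom right
            vertices.Add(centre - right * .5f - up * .5f); // bottom left

            // Same clockwise pattern as the quad: 0, 1, 2 then 0, 2, 3.
            indices.AddRange(new[]
            {
                first, first + 1, first + 2,
                first, first + 2, first + 3
            });
        }
    }
}
```

Each face gets its own four vertices rather than sharing corners with its neighbours, which is what lets every face have its own colours and texture coordinates.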

CubeBasicEffect Class

Render CubeBasicEffect

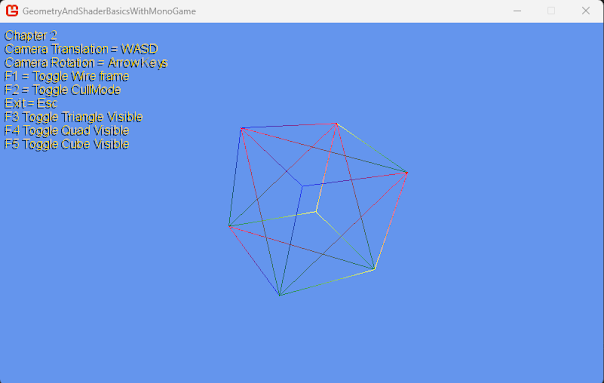

Creating an instance of CubeBasicEffect in our code and running it gives us something like this.

If we switch Fill mode to WireFrame and CullMode to None we can see all the triangles as they are rendered.

Put the cull mode back to CullCounterClockwiseFace and we will only see the triangles facing us.

Pretty neat eh.

I think we now have an understanding of how vertices represent geometry on screen, and that their data needs to be transformed from Object Space, through World Space and into View and Screen space in order for them to be rendered on the screen. How that is done has not yet been covered, and we will have a look at that when we start writing our own shaders.

Next we are going to have a look at the graphics pipeline, again a subject in its own right that could have a whole book dedicated to it (a bit like the maths). We are going to cover enough so we know the order data is processed and how it then comes out the GPU and on to our screen.

Last Chapter: Geometry, what is it?

Next Chapter: The Graphics Pipeline

As ever, thanks again for your time here, comments on this post are more than welcome...

Note from me: I think I need to rewrite this page, as I feel I should go into more detail regarding the base classes. I think at the time of writing the first draft I just wanted to get to rendering some geometry, and so this feels a bit rushed.
